Saturday, April 23, 2016

Xdelta3 License Change

I have re-licensed Xdelta version 3.x under the terms of the Apache License, Version 2.0.

The new sources live at http://github.com/jmacd/xdelta.

The original sources live at http://github.com/jmacd/xdelta-gpl.

The branches are based on the most recent releases (3.0.11 and 3.1.0). Going forward, I intend to maintain the Apache-licensed sources rather than the GPL ones.

Friday, January 08, 2016

Releases 3.1.0 and 3.0.11 are available.

3.0.11 is a bug-fix release addressing a performance bug and several crashes in the decoder (discovered by afl-fuzz).

3.1.0 is a beta release that supports source-window buffer values (-B) greater than 2GB.

The 3.1.0 release uses a 64-bit hash function and currently runs 3-5% slower with 1-2% worse compression. This will be addressed in future releases.

Saturday, July 25, 2015

Re: Release 3.0.10

Fixes XD3_TOOFARBACK #188.

New release script runs build & test on a variety of platforms, with and without XD3_USE_LARGEFILE64.

MinGW build & test in mainline: ./configure --host=x86_64-w64-mingw32.

Monday, March 30, 2015

Re: Release 3.0.9 on Github

Version 3.0.9 is a maintenance release. This release contains minor bug fixes and compiler / portability improvements.

The Github site is new. As explained in README, the site is still under construction; issues and wiki pages were transferred from code.google.com. Checkins for 3.0.9 and a new "64bithash" branch are in the development repository, for now.

(The new branch is aimed at supporting -B flag values greater than 2GB and will be released as 3.1.0 when it passes testing.)

The following public key may be used to verify future Xdelta releases.

-----BEGIN PGP PUBLIC KEY BLOCK-----

Version: GnuPG v2

mQENBFUaKl8BCACtXVrndSXjMdbdqt2+EA5U7YFzqxUnBYGVNcd7Bg+F7lP0n5zp

r4odp7T0MVxcodpu3VpPcb64zNAnU0gWWg3NTIaNjV5LxTGz+ii9IFQiZRs33IR+

PiPsP0gl6OUSsf6KYAa5ploM1P3fWovDZ1QSGndSsCULCcSjOdEfC8vUKYebnDfh

ryoNQPcWtlgD0r0FFW79XGwLdPsbHyDX3TtWfxZHkp24Byl04gVg3GIfPHLL3Nyg

xnM97XPdW/u+zmgx++90QJ0jEm9+kFeLlLF2XuytrIcRPA7w5p9Mad8M41Fh19dn

+/fxz6Cnnm6vu8bzUDk20hntbXuuSmFFPqqRABEBAAG0KUpvc2ggTWFjRG9uYWxk

IDxqb3NoLm1hY2RvbmFsZEBnbWFpbC5jb20+iQE3BBMBCAAhBQJVGipfAhsDBQsJ

CAcCBhUICQoLAgQWAgMBAh4BAheAAAoJECue1N8Kv7ER3sQH/i8AHvnNX41Xs9uP

lkNyMl3jYsDyBktlx3gASUcI63BgYLBo88GxGfQzsKi3hmkJpl8LnZI0YQ6FBu8W

AQeur4LMd0phJGJLD1Ru7HNbA+IDRLWk+oWx7ZFfu4uRHj9hp4BqLGBjCjlIX9kP

jqUGpeDoO/oLEk2HkWq8QkbPTZhS8vZNudEmIGmpn2ZmVOzXxMF1FK/477caEwfY

7cx1ZazSTigCnTybELUCVYtSNv7G435nltR73r1uP9BOuR7leJY7vXiO+XEK5wSG

NTDwJe0kvdKIX4p3ykfgTy8Wn8TQDTS0Ab9qHPx1LKpm1gtr3n1+uK6xGWB0/9U9

SCxHhiK5AQ0EVRoqXwEIAMikTIsBGdyjgG7BuvkoJrOmSHNU+YU1qP3K+fdxIuTH

3Kr2qZ884ZsLVsJzF/VPrUHhWX1uvjZG20m5g7NNiKaKMzbgj9WfPlwVGb5+5tjd

ZKEfE88nacYRw/pXyWp3wcfcOlMZrUUJ3iaU7MNS7vdudNPzc+9cFXJajQjfLXxi

e0W8m+5YmrxSZPTIuT1V5qe+bHl4CRtmfHkzVlsiyoVz8Km+oaD+vj1VWndbI/YC

W9FzHquUdfX+BdtnAUdKTZbnbAwuNmmr6x56YrKsAvfoGbA3FbTljZZMm89vGZMp

e1B2HQddSIf8N2+bSFxDxzQbChCh5GUZHjapVaWoHV8AEQEAAYkBHwQYAQgACQUC

VRoqXwIbDAAKCRArntTfCr+xEf2FB/40SIxSC1oHSLp6Gl4bAj66f7RFX+KQxCVl

AaUbcr5gGY2JFGQwXjnKNdxp88tFnHSvjEbDptqgpgG4rEbrAJLjBdSRn3rRxGDv

K3Q7rCD2RZR5X+2wdB/bO2iHJFvDcBPqc7RVpFfRbZGuWEquwyIFMjowUf9ZBStP

oKkZTqYdn5iiZ8aXy13Jil83ZbaeKpLTxSj4gQFm2O/bP6CwFige2e0DBuUEMopD

Ozhqyo6S84yCTOEqVTudIK7TzH+ZluoNS0426kEmbJZ2L5eHlQeC6l9BXLBHBNbL

73v03V6uiL1mgqZomD9xsoo0mMVf3d0QWag/Mszv6po9iZA80t/v

=PyHl

-----END PGP PUBLIC KEY BLOCK-----

Monday, January 13, 2014

Re: Release 3.0.8 (source) (win32) (x86-64)

Windows build now includes support for liblzma secondary compression with "-S lzma". (Sorry for the delay.)

(I would like to say not thank you to code.google.com for disabling download support two days hence.)

Monday, January 21, 2013

Re: Release 3.0.6 (source) (win32) (win-x64)

This is a bug fix for a performance regression in 3.0.5, which I made available on November 12, 2012 without announcement. The 3.0.5 encoder would achieve poor compression for inputs larger than the source window, due to an improper fix for issue 149.

Please accept my apologies! This has demonstrated several needed improvements in the release process I'm using, which I'll work on putting in the next release.

Sunday, August 19, 2012

Re: 3.0.4

Includes bug fixes for Windows; see issues 143, 144, and 145 (incorrect UNICODE setting).

Non-Windows build now includes support for liblzma secondary compression with "-S lzma". Windows liblzma support coming soon.

Sunday, July 15, 2012

Release 3.0.3 (source)

This release fixes issues with external compression and potential buffer overflows when using the -v setting (verbose output) with very large input files.

This release includes automake / autoconf / libtool support.

A sample iOS application is included, demonstrating how to use xdelta3 with the iPad / iPhone.

I've updated the 32-bit Windows binary; I'll post an update when a 64-bit build is available.

Saturday, January 08, 2011

Release 3.0.0 (source)

A minor change in behavior from previous releases. If you run xdelta3 on source files smaller than 64MB, you may notice xdelta3 using more memory than it has in the past. If this is an issue, lower the -B flag (see issue 113).

3.0 is a stable release series.

Monday, August 02, 2010

Release 3.0z (source)

This release includes both 32-bit and 64-bit Windows executables and adds support for reading the source file from a Windows named pipe (issue 101).

External compression updates. 3.0z prints a new warning when it decompresses externally-compressed inputs, since I've received a few reports of users confused by checksum failures. Remember, it is not possible for xdelta3 to decompress and recompress a file and ensure it has the same checksum. 3.0z improves error handling for externally-compressed inputs with "trailing garbage" and also includes a new flag to force the external compression command. Pass '-F' to xdelta3 and it will pass '-f' to the external compression command.
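To illustrate why a decompress-and-recompress round trip cannot be expected to reproduce the original checksum, here is a minimal Python sketch. It uses zlib from the Python standard library, not any external compressor that xdelta3 invokes, and the sample payload and compression levels are assumptions chosen for the example.

```python
import hashlib
import zlib

# Hypothetical payload; any reasonably compressible data behaves the same way.
payload = b"xdelta3 external compression example\n" * 200

# Two valid compressed encodings of the same bytes, differing only in level.
fast = zlib.compress(payload, 1)
best = zlib.compress(payload, 9)

# Both streams decode to the identical payload...
same_content = zlib.decompress(fast) == zlib.decompress(best) == payload

# ...but the compressed streams, and therefore their checksums, differ.
fast_sum = hashlib.sha256(fast).hexdigest()
best_sum = hashlib.sha256(best).hexdigest()
print("same content:", same_content)
print("same checksum:", fast_sum == best_sum)
```

The same content can have many valid compressed encodings, so a tool that only sees the decompressed bytes has no way to know which one the original file used.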

Monday, February 15, 2010

Re: 3.0y (source)

Re: 3.0x (source)

Version 3.0y fixes several regressions introduced in 3.0w related to the new support for streaming the source file from a FIFO. This was a long-requested feature and I'm pleased to report that now, with the fixes in 3.0y, it appears to be working quite well. The upshot of this feature is that you can encode or decode based on a compressed source file, without decompressing it to an intermediate file. In fact, you can expect the same compression with or without a streaming source file.

There were also reports of the encoder becoming I/O bound in previous releases, caused by the encoder improperly seeking backwards farther than the settings (namely, the -B flag) allowed. This is also fixed, and there's a new test to ensure it won't happen again.

Windows users: I need to investigate issue 101 before building a release of 3.0y. Until I can confirm that streaming-source-file support works on Windows, please continue using the 3.0u release.

Update: The built-in support for automatic decompression of inputs is interacting badly with the new source handling logic, resulting in poor compression.

Sunday, October 25, 2009

Re: 3.0w (source)

With such a good state of affairs (i.e., no bug reports), I was able to tackle a top-requested feature (59, 73). Many of you have asked to be able to encode deltas using a FIFO as the source file, because it means you can encode/decode from a compressed-on-disk source file when you don't have enough disk space for a temporary uncompressed copy. This is now supported, with one caveat.

When decoding with a non-seekable source file, the -B flag, which determines how much space is dedicated to its block cache, must be set at least as large as was used for encoding. If the decoder cannot proceed because -B was not set large enough, you will see:

xdelta3: non-seekable source: copy is too far back (try raising -B): XD3_INTERNAL
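The constraint can be pictured with a small model. The Python sketch below is not xdelta3's block cache: ForwardOnlySource, TooFarBack, and the window parameter are hypothetical names, with window standing in for the -B setting. It reads a stream that cannot seek, keeps only the most recent window bytes, and fails when a copy refers to data that has already been discarded.

```python
import io


class TooFarBack(Exception):
    """Raised when a copy refers to source bytes that were already discarded."""


class ForwardOnlySource:
    """Hypothetical model of a non-seekable source with a bounded cache."""

    def __init__(self, stream, window):
        self.stream = stream      # readable, forward-only byte stream
        self.window = window      # maximum number of bytes retained
        self.buf = bytearray()    # the retained tail of the stream
        self.base = 0             # absolute source offset of buf[0]

    def read_at(self, offset, length):
        end = offset + length
        # Read forward until the requested range has been seen.
        while self.base + len(self.buf) < end:
            chunk = self.stream.read(end - (self.base + len(self.buf)))
            if not chunk:
                raise EOFError("copy extends past the end of the source")
            self.buf += chunk
            # Discard the oldest bytes once the cache exceeds the window.
            excess = len(self.buf) - self.window
            if excess > 0:
                del self.buf[:excess]
                self.base += excess
        if offset < self.base:
            raise TooFarBack("copy is too far back (try a larger window)")
        start = offset - self.base
        return bytes(self.buf[start:start + length])


data = bytes(range(256)) * 4
small = ForwardOnlySource(io.BytesIO(data), window=64)
small.read_at(100, 16)          # advances the stream; offsets below 52 are gone
try:
    small.read_at(10, 4)
except TooFarBack as err:
    print("small window:", err)

large = ForwardOnlySource(io.BytesIO(data), window=len(data))
large.read_at(100, 16)
print("large window:", large.read_at(10, 4) == data[10:14])
```

In this model a decoder with a smaller window than the encoder can hit a copy the encoder was able to make but the decoder can no longer satisfy, which matches the caveat described above.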

The stream->src->size field has been eliminated. Internally, a new stream->src->eof_known state has been introduced. This was a big improvement in code quality because, now, the source and target files are treated the same with respect to external (de)compression and several branches of code are gone for good.

Update Issue 95: command-line decode I/O-bound in 3.0w

Wednesday, May 06, 2009

Re: MS "Linker Version Info" in 3.0u

I can't explain this problem report *SEE BELOW*, indicating that Xdelta on Microsoft platforms is slower than the previous build by a factor of two.

?

Hello ... !

First of all I want to thank you for creating XDelta tool.

Realy nice and well documented stuff which sometimes helps a lot.

When I changed from v3.0t to v3.0u I've noticed some slowdown

in processing time. Well, it was just visual observation so I decided

to conduct a couple of tests. Here's what I've done.

Test was conducted on virtual memory disk using RAMDiskXP v2.0.0.100

from Cenatek Inc. It was done to exclude any impact from IO system.

Processing time was measured with Timer v8.00 tool by Igor Pavlov.

Test files size is about 275 MB. More exactly speaking 288 769 595

bytes for Test.dat.old and 288 771 262 bytes for Test.dat

Command line for XDelta is:

timer xdelta3 -v -9 -A= -B x -W x -I 0 -P x -s Test.dat.old Test.dat Diff.dat

where x is one of the values given below with the test results.

      x    3.0t    3.0u
-------   -----   -----   --------------
  16384   3.687   6.391    73.3% slower
  65536   3.469   6.125    76.6% slower
1048576   2.578   5.453   111.5% slower
4194304   3.281   6.625   101.9% slower
8388608   3.953   7.718    95.2% slower

As you see v3.0u is averagely 91.7% SLOWER !!! I don't think it's a

some evil coincidence cause I redone every test twice. I have only one

clue. I see Linker Version Info in v3.0t exe-file is 8.0 while is 9.0

for the v3.0u exe-file so I suppose you changed or compilator itself

or its version.

I'll be very glad to hear from you.

Truly yours, ...
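The slowdown figures in the report above can be re-derived from its timings. The following Python sketch assumes the two timing columns are in the same unit and recomputes (3.0u / 3.0t - 1) as a percentage; the variable and function names are illustrative only.

```python
# Timings copied from the report: x -> (3.0t time, 3.0u time).
timings = {
    16384: (3.687, 6.391),
    65536: (3.469, 6.125),
    1048576: (2.578, 5.453),
    4194304: (3.281, 6.625),
    8388608: (3.953, 7.718),
}


def percent_slower(old, new):
    """Relative slowdown of `new` versus `old`, as a percentage."""
    return (new / old - 1.0) * 100.0


slowdowns = {x: percent_slower(t, u) for x, (t, u) in timings.items()}
average = sum(slowdowns.values()) / len(slowdowns)

for x, pct in slowdowns.items():
    print(f"{x:>8}  {pct:6.1f}% slower")
print(f" average  {average:6.1f}% slower")
```

Rounded to one decimal place, the recomputed values agree with the figures quoted in the e-mail, including the 91.7% average.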

Wednesday, March 11, 2009

Re: 3.0v source release

I'm releasing SVN 281, which has an API fix (see issue 79). There's a new group for future announcements.

Thursday, January 29, 2009

The wiki has great comments:

Answers to the final two questions:

Other top issues and requests:

Saturday, September 13, 2008

Re: Xdelta-3.0u (source)

I'm making a source-only release, which I haven't done in a while, because I don't have the necessary build tools for Windows (due to a broken machine) and I don't want to delay this release because of it.

2008.10.12 update: Windows executable posted

Release Notes:

- New xdelta3 merge command (issue 36)

- Windows stdin/stdout-related fixes (issue 34)

- Fix API-only infinite loop (issue 70)

- Various portability and build fixes (gcc4, DJGPP, MinGW, Big-endian, Solaris, C++)

- New regression test (yeah!)

A VCDIFF encoding contains two kinds of copy instructions: (1) copies from the source file, which the merge command can translate with straightforward arithmetic, and (2) copies from the target file (itself), which are more difficult. Because a target copy can copy from another target copy earlier in the window, merging from a target copy at the end of a window may involve following a long chain of target copies all the way to the beginning of the window. The merge command implements this today using recursion, which is not an efficient solution. A better solution involves computing a couple of auxiliary data structures so that (1) finding the origin of a target copy is a constant-time operation, and (2) it is possible to find where (if at all) the origin of a target copy is copied by a subsequent delta in the chain. The second of these structures requires, essentially, a function to compute the inverse mapping of a delta, which is a feature that has its own applications.
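The chain-following problem can be sketched in a few lines of Python. This is a simplified model, not the merge command's code: the instruction tuples ("add", "src", "tgt") and the functions build_starts and resolve are hypothetical, and real VCDIFF windows carry more detail (address modes, instruction tables). The sketch walks one target position back through target copies until it reaches literal data or a source copy, counting the hops.

```python
import bisect

# Hypothetical instruction forms for one target window:
#   ("add", data)             literal bytes
#   ("src", offset, length)   copy from the source file
#   ("tgt", offset, length)   copy from earlier output in this window


def inst_size(inst):
    return len(inst[1]) if inst[0] == "add" else inst[2]


def build_starts(insts):
    """Output position at which each instruction begins."""
    starts, pos = [], 0
    for inst in insts:
        starts.append(pos)
        pos += inst_size(inst)
    return starts


def resolve(insts, starts, pos):
    """Follow target copies from `pos` to their origin; return (origin, hops)."""
    hops = 0
    while True:
        i = bisect.bisect_right(starts, pos) - 1
        inst = insts[i]
        delta = pos - starts[i]
        if inst[0] == "add":
            return ("add", inst[1][delta:delta + 1]), hops
        if inst[0] == "src":
            return ("src", inst[1] + delta), hops
        # A target copy always points at strictly earlier output,
        # so this loop terminates.
        pos = inst[1] + delta
        hops += 1


window = [
    ("src", 100, 4),
    ("tgt", 0, 4),
    ("tgt", 4, 4),
    ("add", b"xy"),
    ("tgt", 8, 6),
]
starts = build_starts(window)
print(resolve(window, starts, 14))
print(resolve(window, starts, 18))
```

Here the byte at position 14 is reached only after following three target copies back to the source copy, which is the kind of chain the paragraph above describes. Precomputing the origin of every instruction once would turn each lookup into a constant-time operation.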

Summary: the merge command handles target copies inefficiently, and the code to solve this problem will let us reverse deltas. Together, the merge and reverse commands make a powerful combination, making it possible to store O(N) delta files and construct deltas for updating between O(N^2) pairs using efficient operations. This was the basis of xProxy: A transparent caching and delta transfer system for web objects, which used an older version of Xdelta.

Thursday, September 04, 2008

Re: Google's open-vcdiff

Google released a new open-source library for RFC 3284 (VCDIFF) encoding and decoding, designed to support their proposed Shared Dictionary Compression over HTTP (SDCH, a.k.a. "Sandwich") protocol.

This is great news. The author and I have had numerous discussions over a couple of features that VCDIFF lacks, and now that we have two open-source implementations we're able to make real progress on the standard. Both Google's open-vcdiff and Xdelta-3.x implement extensions to the standard, but I ran a simple interoperability test and things look good. Run xdelta3 with "-n" to disable checksums and "-A" to disable its application header.

A big thanks to Lincoln Smith and Google's contributors. (Disclaimer: I am an employee of Google, but I did not contribute any code to open-vcdiff.)

P.S. I'm still debugging the xdelta3 merge command, stay tuned to issue 36.

Wednesday, July 09, 2008

Re: Regression test framework

3.0t released in December 2007 has turned out to be extremely stable, and I'm busy preparing the first non-beta release candidate. The problem with being stable is the risk of regression, so I've been nervously putting together a new regression testing framework to help exercise obscure bugs.

The most critical bug reported since 3.0t is a non-blocking API-specific problem. Certain bugs only affect the API, not the xdelta3 command-line application, because the command-line application uses blocking I/O even though the API supports non-blocking I/O. Issue 70 reported an infinite loop processing a certain pair of files. The reporter graciously included sample inputs to reproduce the problem, and I fixed it in SVN 239.

But I wasn't happy with the fix until now, thanks to the new regression testing framework. With ~50 lines of code, the test creates an in-memory file descriptor, then creates two slight variations intended to trigger the bug. SVN 256 contains the new test.

So just what is it that makes me feel 3.x is so stable? It's e-mails like this from Alex White at intralan.co.uk:

- Hi Josh,

FYI:-

Been using XDelta for a while now, been working flawlessly (I wish all software could be this stable), been patching up to 1tb of data per day (across many servers), largest single file to date 70gb!!!

Did some performance testing and with standard SATAII drives with both sources and the patch being created on the same drive the processing was around 300mb per minute (creating patches), setup a dual drive configuration where one of the sources was on a second drive and then the processing was around 1gb per minute (creating patches) .

Best large file patch to original file size ratio,

Original file size: 56,147,853,312

Patch file size: 299,687,049

Patch file reduction over original file size 99.47%

This file took 2 hours and 21 minutes to patch in a real world setting.

Monday, April 21, 2008

Update for April 2008:

I started making beta releases for Xdelta-3.x four years ago. There were a lot of bugs then. Now the bug reports are dwindling, so much so that I've had a chance to work on new features, such as one requested by issue 36. Announcing the xdelta3 merge command. Syntax:

xdelta3 merge -m input0 [-m input1 [-m input2 ... ]] inputN output

This command allows you to combine a sequence of deltas, producing a single delta that combines the effect of applying two or more deltas into one. Since I haven't finished testing this feature, the code is only available in subversion. See here and enjoy.
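As a rough picture of what combining two deltas means, here is a Python sketch under heavy simplification. It is not the xdelta3 implementation and does not read VCDIFF files: a delta is modeled as a list of hypothetical ("copy", offset, length) and ("add", data) tuples, and only copies from the source are handled. Given d1 (A to B) and d2 (B to C), merge rewrites each copy in d2 in terms of A.

```python
def inst_size(inst):
    return inst[2] if inst[0] == "copy" else len(inst[1])


def apply_delta(source, delta):
    """Rebuild the target from `source` and a list of instructions."""
    out = bytearray()
    for inst in delta:
        if inst[0] == "copy":
            out += source[inst[1]:inst[1] + inst[2]]
        else:
            out += inst[1]
    return bytes(out)


def slice_output(delta, start, length):
    """Instructions producing bytes [start, start + length) of delta's output."""
    result, pos, end = [], 0, start + length
    for inst in delta:
        size = inst_size(inst)
        lo, hi = max(start, pos), min(end, pos + size)
        if lo < hi:
            if inst[0] == "copy":
                result.append(("copy", inst[1] + (lo - pos), hi - lo))
            else:
                result.append(("add", inst[1][lo - pos:hi - pos]))
        pos += size
    return result


def merge(d1, d2):
    """Compose d1 (A -> B) with d2 (B -> C) into one delta (A -> C)."""
    merged = []
    for inst in d2:
        if inst[0] == "copy":
            # A copy from B becomes whatever d1 used to produce that range.
            merged.extend(slice_output(d1, inst[1], inst[2]))
        else:
            merged.append(inst)
    return merged


a = b"hello world, this is version A"
d1 = [("copy", 0, 6), ("add", b"there"), ("copy", 11, 19)]
d2 = [("copy", 6, 5), ("add", b"! "), ("copy", 0, 6), ("copy", 20, 10)]

two_steps = apply_delta(apply_delta(a, d1), d2)
one_step = apply_delta(a, merge(d1, d2))
print(one_step == two_steps)
```

The merged delta can be applied to A directly, without materializing the intermediate version B.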

Thursday, December 06, 2007

Xdelta-3.0t release notes:

- Improves compression by avoiding inefficient small copies (e.g., copies of length 4 at a distance >= 2^14 are skipped)

- Fixes an uninitialized array element in -S djw which did not cause a crash, but caused less than ideal compression and test failures

- Fixes bugs in xdelta3 recode, tests added

- All tests pass under Valgrind
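One plausible reading of the first item above is a cost argument, sketched here in Python. This is an assumption on my part, not the encoder's actual rule: worth_copying is a hypothetical function, and it ignores VCDIFF address modes and instruction tables. What is certain is that RFC 3284 stores integers in base 128, seven payload bits per byte, so a value of 2^14 or more needs at least three bytes.

```python
def varint_len(value):
    """Bytes needed for a base-128 variable-length integer (7 bits per byte)."""
    n = 1
    while value >= 128:
        value >>= 7
        n += 1
    return n


def worth_copying(length, distance):
    """Hypothetical rule: keep a copy only if it beats emitting literal bytes.

    Assumes a copy costs one instruction byte plus the distance as a varint,
    while literal data costs one byte per byte copied.
    """
    copy_cost = 1 + varint_len(distance)
    return copy_cost < length


for distance in (2 ** 14 - 1, 2 ** 14):
    print(distance, varint_len(distance), worth_copying(4, distance))
```

Under these assumptions a length-4 copy at a distance of 2^14 costs as much as the four literal bytes it replaces, so skipping it loses nothing.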

Thursday, November 08, 2007

Xdelta-3.0s release notes:

- Faster! Several optimizations to avoid unnecessary arithmetic

- xdelta3 -1 has faster/poorer compression (xdelta3 -2 is the former configuration)

- -S djw exposes secondary compression levels as -S djw1 .. -S djw9

- Removes "source file too large for external decompression" error check

- API support for transcoding other formats into VCDIFF

- Fixes an encoder crash caused by -S djw (secondary compressor) on certain data, new tests for code-length overflow

- Adds new recode command for re-encoding a delta with different secondary compression settings

- Fixes API-specific bugs related to non-blocking calls to xd3_encode_input and xd3_decode_input

- Adds new examples/encoder_decoder_test.c program for the non-blocking API

$ ./xdelta3 -S none -s xdelta3.h xdelta3.c OUT  # 53618 bytes
$ ./xdelta3 recode -S djw1 OUT OUT-djw1         # 51023 bytes
$ ./xdelta3 recode -S djw3 OUT OUT-djw3         # 47729 bytes
$ ./xdelta3 recode -S djw6 OUT OUT-djw6         # 45946 bytes
$ ./xdelta3 recode -S djw7 OUT OUT-djw7         # 45676 bytes
$ ./xdelta3 recode -S djw8 OUT OUT-djw8         # 45658 bytes
$ ./xdelta3 recode -S djw9 OUT OUT-djw9         # 45721 bytes

Secondary compression remains off by default. Passing -S djw is equivalent to -S djw6.

Wednesday, July 18, 2007

I'm happy about an e-mail from a manager at Pocket Soft, clarifying what was written in my previous post. Obviously, Pocket Soft deserves recognition here because, commercially speaking, they're the only basis for comparison sent by users. I am posting the entire content here.

- Hi, Josh. I'm the Manager of Software Engineering at Pocket Soft, makers of RTPatch. I'd like to address a misquote contained in your June 28th post and hopefully clear the air and explain Pocket Soft's official position with respect to open source, patents and head-to-head comparison with XDelta. I'm happy for you to use all or part of my email within your site, if you feel it is appropriate.

First of all, the quote "xdelta likely infringes on our patents as well" is inaccurate (nobody from Pocket Soft made that specific remark to anyone at anytime); however, it does embody the essence of a statement made by one of our salesmen to a company in Australia that could have been *construed* to suggest a patent infringement claim. This was a one-time incident, and was not representative of Pocket Soft's corporate position. In fact, our CEO spoke to the CEO of the Australian outfit who received this message and explained that it was the result of an overzealous salesman. We have also addressed this issue internally with all staff.

In fact, Pocket Soft is a supporter of open source software, and understand that it serves a great purpose in our industry. Further, having spent the last 15+ years on the specific problems associated with byte-level differencing, we have a great appreciation for the work that you have done on XDelta. The purpose of patents for Pocket Soft's use are in fact defensive and to serve as a sales "check list" for our Enterprise customers. Consider the following [article].

In particular, the section of "Legal Risk" comes into play frequently with the OEM and even shrink-wrap sales we make to Enterprise. When you are selling to the Oracles of the world, we are almost always asked "what about indemnity?". The existence of a patent on the core technology helps to alleviate fears of legal risk - regardless of your, or even our own, opinions on software patents themselves.

Regarding head-to-head comparison, we are well aware of specific places where we could improve speed or patch file size in RTPatch to gain a few points here and there; however, the needs of our customers regarding reliability trump that. Your reader wrote that they had a 4.5 year old version of RTPatch. Some of our users cling to 12 year old versions fearing the possibility of destabilization with a new release. Our Enterprise customers like to hear that we can claim with absolute sincerity that the current patch file format, Build engine, etc., have been thoroughly tested and that the same algorithms are being used for the million+ patches applied every single day by users of RTPatch (McAfee and Trend Micro alone put out that many patches). When the US Navy began using RTPatch for their digitized nautical charts used by ships at sea, they cared less about gaining a few more points on size reduction or even speed of Build. They needed reliability and robustness.

This is not to say that your product lacks these characteristics. I am speaking only to the issues that we are faced with daily by our customers and potential customers. We also have to deal with the extended feature-set of RTPatch, including support for Windows Installer, automatic patch delivery mechanisms, as well as a host of Windows-specific features and options (self-registering files, registry support, etc.). The core algorithm is, of course, important, but in our market, there is much more than just byte-level differencing at play.

Finally, on a personal note, I wanted to say that we're not a big, bad corporation out to squash or bully innovators of open source software. On the contrary, we appreciate the attention you draw to yourself, which in turns brings companies who are in our market, to the attention of RTPatch. I understand that you work at Google now, so I'm sure that you know that not *all* companies are evil. :)

I hope you took the time to read this far. I certainly appreciate the opportunity to clear the air. Feel free to call or email anytime, and I'd be happy to chat.

Best of luck to you,

Tony O-

Manager, Software Engineering

Pocket Soft, Inc.

713-460-5600

Re: Patents and the Oracles of the world

The threat model is: I will sell a software license excluding you from the (copyright-related) terms of the GPL, giving you unlimited use for a flat fee, but it's done without representations, warranties, liabilities, indemnities, etc. The argument is, your company could be sued over intellectual property rights if any of the following technologies and programs should fall to a claim (although they have never to my knowledge been in doubt): Zlib-1.x, Xdelta-1.x, and the draft-standard RFC 3284 (VCDIFF).

I will say this:

- In the event [Company] discovers that Licensor is not the legal owner and title holder of any code sequence within Xdelta delivered under this Agreement, [Company] shall notify Licensor of such discovery in writing, including the specific software code sequence in question, and shall give Licensor One Hundred Eighty (180) days to replace same with a functionally equivalent code sequence that Licensor is the legal owner and title holder of, all prior to invoking any form of relief which may be permitted under this Agreement. (contact me for a sample license)

Re: Support

In the unlikely event that you find an Xdelta crash or incorrect result, I'm really interested in fixing it. I keep track of issues. I respond to e-mail, like this one about directory-diff support:

- hi, i'm using your AMAZING(!) xdelta 1.1.3 and is fantastic...i use it for make the patches for my translations and stuff like these...i was wondering...is it possible to implement a feature that expand the process to all the subdirectories? using a for like this for %%i in (*.*) do xdelta delta "OLD\%%i" "NEW\%%i" "%%i.patch" it doesn't recreate the dir tree and it doesn't take the subdirectories...what do you think about that?

- We are using xdelta3 to compress our backups down at my workplace and it is working excellently, however whichever machine it is run on is put out of commission for the duration due to the xdelta3 executable window popping up for each file. Would it be possible to build the xdelta3 program as a DLL, much like zlib is? I have absolutely no knowledge of C/C++ so I don't know if this is a huge ask or not :)

Thursday, June 28, 2007

No new versions posted since March, so a few updates. One user sent a MSDOS .bat script for xdelta1/xdelta3 command-line compatibility, another sent a perl script for recursive directory diff, one user reports good performance for an in-kernel application (sample code), and some feature requests. Given the lack of bug reports, it's about time to take xdelta3 out of beta.

Several of you have requested a feature for supporting in-place updates, allowing you to apply deltas without making a copy of the file, which brings me to another bit of user feedback:

- Firstly, I’d just like to let you know that we did a little benchmark at work and xdelta3 came out on top from several utilities in terms of both execution speed and final output size.

One of those utilities is a 4.5 year old version of RTPatch which we have a license for.

One of my work colleagues emailed Pocket Soft about this, and as well as the obvious “you’re using an old version” response, and “open source software has no support” arguments, they also said something along the lines of: “xdelta likely infringes on our patents as well”, implying that using it may be illegal.

Being a great supporter and user of open source in my spare time, I am totally against this sort of spreading of F.U.D., as well as the very idea that software is patentable.

I’m not sure if my work colleague emailed you as well, but I just thought I’d let you know. :)

I have to thank the IETF and previous work in open source (e.g., RFC 1950 – Zlib, RFC 3284 – VCDIFF) for making this possible. Zlib bills itself "A Massively Spiffy Yet Delicately Unobtrusive Compression Library (Also Free, Not to Mention Unencumbered by Patents)", and in fact Zlib inspired Xdelta's API from the start (it's "unobtrusive"). Let's not forget Zlib's other main advantage (it's "unencumbered"). As for the previous request (in-place updates), interest is strong but patents could become an issue.

Multi-threaded encoding/decoding is another frequent request. The idea is that more CPUs can encode/decode faster by running in parallel over interleaved segments of the inputs. That's future work, and probably a lot of it, but I like the idea.

Xdelta 3.0q has 11,480 downloads. It's you the user that feeds open source, and thanks for the great feedback!

Sunday, March 25, 2007

Xdelta 3.0q features a new MSI installer for Windows.

Thanks to many of you for your feedback on Windows installation (issues 16, 26, 27). Thanks especially to Nikola Dudar for explaining how to do it.

Release 3.0q fixes Windows-specific issues: (1) do not buffer stderr, (2) allow file-read sharing. Thanks for the feedback!

Thanks for the following build tools:

Windows Installer XML (WiX) toolset

Microsoft Visual C++ 2005 Redistributable Package (x86)

Microsoft Visual C++ 2005 Express Edition

Microsoft Platform SDK for Windows Server 2003 R2

Tuesday, February 27, 2007

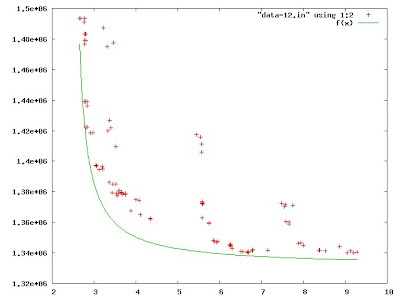

Plot shows the performance of variable compression-level settings, x (time) and y (compressed size), tested on a specific pair of cached 130MB inputs.

Compression has an inverse relation between time and space performance. The green line is a hyperbola for reference, f(x) = 1.33MB + (30KB*s) / (x - 2.45s). Sample points:

1.49MB in 2.9sec at ~45MB/sec (98.9% compression)

1.34MB in 6.5sec at ~20MB/sec (99.0% compression)
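The figures above can be re-derived with a few lines of arithmetic. This is only a sketch: the function and constant names are made up for illustration, and it assumes 1MB = 1000KB, which the post does not state. The hyperbola is a reference line rather than a fit, so a sample point need not lie exactly on it.

```python
# Reference curve from the post: f(x) = 1.33MB + (30KB*s) / (x - 2.45s)
# Assumption (not stated in the post): 1MB = 1000KB.
ASYMPTOTE_MB = 1.33      # size the curve approaches as time grows
SCALE_KB_S = 30.0        # numerator, in KB * seconds
TIME_OFFSET_S = 2.45     # vertical asymptote, in seconds
INPUT_MB = 130.0         # size of each test input


def reference_size_mb(seconds):
    """Compressed size in MB given by the reference hyperbola."""
    if seconds <= TIME_OFFSET_S:
        raise ValueError("the curve is only defined for x > 2.45s")
    return ASYMPTOTE_MB + (SCALE_KB_S / 1000.0) / (seconds - TIME_OFFSET_S)


def throughput_mb_per_s(seconds):
    """Input megabytes processed per second."""
    return INPUT_MB / seconds


def compression_percent(size_mb):
    """Percentage of the input size saved by the delta."""
    return 100.0 * (1.0 - size_mb / INPUT_MB)


if __name__ == "__main__":
    # The two sample points quoted in the post: (size in MB, time in seconds).
    for size_mb, seconds in [(1.49, 2.9), (1.34, 6.5)]:
        print("%.2fMB in %.1fs: %.0f MB/s, %.1f%% compression, curve: %.2fMB" % (
            size_mb, seconds, throughput_mb_per_s(seconds),
            compression_percent(size_mb), reference_size_mb(seconds)))
```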

Sunday, February 18, 2007

Re: Xdelta 3.0p (download)

Xdelta-3.x processes a window of input at a time, using a fixed memory budget. This release raises some of the default maximums, for better large-file compression. The default memory settings for large files add up to about 100Mb. (*)

A user wrote to confirm good performance on 3.7Gb WIM files, great!

- So, to answer the question in your post on Jan 28th, yes we are using xdelta3 with files larger than 1Gb and it's working well.

I'm off to try boosting the compression and see how much we can scrunch the patch down. Thank-you for an extremely useful tool that is going to hugely improve our ability to distribute updates to our build images.

A user writes about code size:

- I saw your post where you said you’d improved the compression in Xdelta3 and that it was now slightly faster than 1.1. Do you happen to know where the two stand without compression enabled? (We don’t use Xdelta’s compression because we compress all of our files after the fact with Rar solid compression.) Thanks too for the link to those benchmarks. Those were quite helpful, and it’s cool to see Xdelta come out on top. ;)

I will say this: One nice thing about the new Xdelta is its smaller size! Xdelta 1.1’s exe is 1Mb when not compressed via UPX, whereas Xdelta3 is 100Kb. Nice. :)

I'm looking for your ideas on recursive directory diff. One solution uses tar:

- XDELTA="-s source" tar --use-compress-program=xdelta3 -cf ...

Thanks for the feedback (file a new issue).

(*) Xdelta-1.x processes the whole input at once, and its data structure takes linear space, (source file size/2)+O(1). Xdelta-1.x reads the entire source file twice (once to generate checksums, once to match the input), using at least half as much memory as the source file size.
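To put the footnote in numbers, here is a rough back-of-the-envelope sketch. The helper names are hypothetical, decimal units are assumed, and the O(1) term is ignored; it only restates the "half the source file" and "about 100Mb" figures from this post.

```python
MB = 1000 ** 2  # assumption: decimal megabytes

# "About 100Mb" default budget for large files, per the post.
XDELTA3_DEFAULT_BUDGET = 100 * MB


def xdelta1_min_memory(source_size_bytes):
    """Lower bound from the footnote: half the source file size."""
    return source_size_bytes // 2


def memory_ratio(source_size_bytes):
    """How many times larger the 1.x lower bound is than the 3.x budget."""
    return xdelta1_min_memory(source_size_bytes) / float(XDELTA3_DEFAULT_BUDGET)


if __name__ == "__main__":
    wim_size = 3700 * MB  # the 3.7Gb WIM files mentioned above
    print("Xdelta-1.x needs at least %d MB" % (xdelta1_min_memory(wim_size) // MB))
    print("That is %.1fx the Xdelta-3.x default budget" % memory_ratio(wim_size))
```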

Wednesday, February 07, 2007

Users want speed, especially video gamers. I tested with some of your data: Unreal tournament and Wesnoth patches. These patches save 50-100MB per download.

Xdelta1 remains popular today because of speed, and xdelta3 until now hasn't been as fast (debdev has tests). Xdelta-3.0o has improved compression levels (download).

Over my sample data, the new default compression level (same as the command-line flag -1) is a little faster than Xdelta-1.x, with roughly the same compression. Compression levels -3, -6, and -9 are also improved.

This release also features Swig support, e.g.,

import xdelta3

# memory interface

source = 'source source input0 source source'

target = 'source source target source source'

result1, patch = xdelta3.xd3_encode_memory(target, source, len(target))

result2, target1 = xdelta3.xd3_decode_memory(patch, source, len(target))

assert result1 == 0

assert result2 == 0

assert target1 == target

assert len(patch) < len(target)

# command-line interface

source_file = ...

target_file = ...

output_file = ...

command_args = ['xdelta3', '-f', '-s', source_file, target_file, output_file]

xdelta3.xd3_main_cmdline(command_args)

Re: SVN teaser

SVN 125 has a new XDELTA environment variable for passing flags off the command-line, so you can use xdelta3 in conjunction with tar:

export XDELTA

XDELTA="-s source-1.tar"

TAR="tar --use-compress-program=xdelta3"

$TAR -cvf source-1-source2.tar.vcdiff source-2/

...

$TAR -xvf source-1-source2.tar.vcdiff source-2/file

Thursday, February 01, 2007

Re: Performance

From The Old Joel on Software Forum:

- I checked out rtpatch and xdelta a little while ago - unless there's something wrong with the demo version rtpatch has on their website, xdelta is significantly faster at making patches, and they are a tiny bit smaller.

The test I ran was on a 200 Meg application, where about 30 megs of random parts of files was different. Rtpatch took around an hour (not sure since I wandered off while waiting) and xdelta took a couple minutes.

We ended up rolling our own system anyhow, because our application has pretty serious requirements on robustness. Maybe we should try selling it?

Another post in the same thread writes:

- I did some research on 'patching' like issues, read some papers on the "Longest Common Subsequence" problem, wrote some prototype code... And we ultimately decided to defer the issue in our product. Note that we weren't trying to patch our software, we were interested in including tools in our product that would allow administrators to build patches for applications they support in house.

Long story short...it's a heavily researched issue in Computer Science, but it's still a difficult problem to solve well. There are both space and time considerations, although running on a 'modern' OS with virtual memory eases the space consideration. After my evaluation, my guess was that RTPatch was in no way producing an optimal solution, but that they were relying on compression to keep the size of the patch small. Their genius lies in being able to generate a patch so quickly...

Virtual memory does not ease the space consideration. Reading from disk is terribly slow, so Xdelta3 avoids seeking backwards in the source file during compression. Read more about xdelta3 memory settings.

Re: SVN 100

If you're keeping up to date with the xdelta source code via subversion, version 100 has a few recent changes: (1) it compiles on cygwin (1.x and 3.x), and (2) it responds to bug report 17.

Sunday, January 28, 2007

Re: Xdelta 1.1.4

An especially grateful user wrote me to say thanks for the open-source software:

- Firstly, Xdelta is an impressive piece of software, and I appreciate you writing it. I've done some tests and found it to be orders of magnitude faster than RTPatch, the expensive commercial diffing tool!

I'm using a Windows ported version of Xdelta 1.1.3 in a project I'm working on here, and it works great. (I realize that XDelta 1.1.3 is old, but I like how fast it is relative to the results). Because that version is licensed under the GPL, I am including a copy of XDelta.exe with the program instead of integrating the code directly. I'm content to continue doing this, as obviously I want to respect your licensing wishes for the software. (*)

- I hope you can release a bugfix and rub off the beautymarks. xdelta-1.1.x is still used widely and a maintenance release would be appreciated.

This is a maintenance release: Xdelta 1.1.4 remains substantially unchanged since 1999. This release fixes a bug: Compressed data from 32-bit platforms failed to decompress on 64-bit platforms. This is fixed in the decoder (it was a badly-designed "hint", now ignored), so you can now read old 32-bit patches on 64-bit platforms. Patches produced by 1.1.4 are still readable by 1.1.3 on the same platform. Still, Xdelta 1.1.x is losing its edge.

Xdelta3 compresses faster and better, using a standardized data format (VCDIFF), and has no dependence on gzip or bzip2. If using a standardized encoding is not particularly important for you, Xdelta3 supports secondary compression options. Xdelta3 (with the -9 -S djw flags) is comparable to bsdiff in terms of compression, but much faster. Xdelta3 includes a Windows .exe in the official release.

As always, I'm interested in your feedback (file a new issue). Are you compressing gigabyte files with Xdelta3? Have you used dfc-gorilla (by the makers of RTPatch)?

Sunday, January 21, 2007

Xdelta3 has a stream-oriented C/C++ interface. The application program can compress and decompress data streams using methods named xd3_encode_input() and xd3_decode_input(). With a non-blocking API, it's about as easy as programming Zlib.
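For readers who have not programmed Zlib this way, the sketch below shows the general chunk-at-a-time pattern using Python's standard zlib module. It is an analogy only: it does not call Xdelta3, and the helper names are invented for illustration. The idea is the same, though: feed input as it arrives and collect output whenever the codec produces some.

```python
import zlib


def compress_stream(chunks, level=6):
    """Feed input one chunk at a time, yielding output as it becomes available."""
    encoder = zlib.compressobj(level)
    for chunk in chunks:
        out = encoder.compress(chunk)
        if out:  # the encoder may buffer and return nothing for a while
            yield out
    yield encoder.flush()  # emit whatever is still buffered


def decompress_stream(chunks):
    """Mirror image of compress_stream for the decoding side."""
    decoder = zlib.decompressobj()
    for chunk in chunks:
        out = decoder.decompress(chunk)
        if out:
            yield out
    tail = decoder.flush()
    if tail:
        yield tail


def split(data, size):
    """Cut a byte string into pieces of at most `size` bytes."""
    return [data[i:i + size] for i in range(0, len(data), size)]


if __name__ == "__main__":
    data = b"source source target source source " * 1000
    packed = b"".join(compress_stream(split(data, 4096)))
    unpacked = b"".join(decompress_stream(split(packed, 100)))
    print(len(data), len(packed), unpacked == data)
```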

Read about it here.

Thanks for your feedback (file a new issue).

Monday, January 15, 2007

Release 3.0l (download)

This release raises the instruction buffer size and documents the related performance issue. Problems related to setting -W (input window size) especially small or especially large were fixed: the new minimum is 16KB, the new maximum is 16MB. A regression in the unit test was fixed: the compression-level changes in 3.0k had broken several hard-coded test values.

The encoder has compression-level settings to optimize variously for time and space, such as the width of the checksum function, the number of duplicates to check, and what length is considered good enough. There are 10 parameters (Zlib, by comparison, has 4), but the flag which sets them (-C) is undocumented. I am documenting these and developing experiments to find better defaults.

There's a new page about external compression.

Thanks for your feedback (file a new issue).

Friday, January 12, 2007

Release 3.0k (download)

This is the first release making only performance improvements, not bug fixes. The default source buffer size has increased from 8 to 64 megabytes, and I've written some notes on tuning memory performance for large files. I've been running experiments to find better compression-level defaults. This release has two default compression levels, fast (-1 through -5) and the default slow (-6 through -9), both of which are faster and better than the previous settings. There's more work to do on tuning in both regards, memory and compression level, but this is a starting point.

There is a new wiki on command line syntax. Thanks for your feedback (file a new issue).

Sunday, January 07, 2007

Release 3.0j (download)

The self-test (run by xdelta3 test) now passes. There had been a regression related to external-compression, and several tests had to be disabled on Windows. Also fixes VCDIFF info commands on Windows (e.g., xdelta3 printdelta input) and memory errors in the Python module.

Thanks for your continued feedback (file a new issue). A user reports that xdelta3.exe should not depend on the C++ 8.0 Runtime. I agree: this is written in C. The source release includes a .vcproj file, in case you'd like to try for yourself.

Saturday, December 16, 2006

Thanks for your feedback. (Submit a new report).

Release 3.0i builds with native Windows I/O routines (enabled by /DXD3_WIN32=1) and has been tested on 64 bit files. (Issue closed).

Windows: download

Source: download

Sunday, December 10, 2006

#include <windows.h>

Ladies and Gents,

Version 3.0h runs on Windows.

Please head straight for the latest download of your choice:

Source

Windows x86-32

OSX PPC

I thought I'd share this first and test it later, let you be the judge.

There are not a lot of platform dependencies. The main() routine has helpful options:

- /DXD3_STDIO=1 builds with portable I/O routines

- /DEXTERNAL_COMPRESSION=0 builds without external compression, which requires POSIX system calls

The remaining changes were minimal, such as the printf format string for 64bit file offsets. I haven't run a 64bit test on Windows—I was too busy posting this. :-)

static long

get_millisecs_now (void)

{

#ifndef WIN32

struct timeval tv;

gettimeofday (& tv, NULL);

return (tv.tv_sec) * 1000L + (tv.tv_usec) / 1000;

#else

SYSTEMTIME st;

FILETIME ft;

__int64 *pi = (__int64*)&ft;

GetLocalTime(&st);

SystemTimeToFileTime(&st, &ft);

return (long)((*pi) / 10000);

#endif

}

Please file issues here or send mail to <josh.macdonald@gmail.com>.

(Thanks to TortoiseSVN for keeping us in sync.)

To: Microsoft

Re: Windows support

Dear sirs,

Thanks for the free downloads!

Microsoft Visual C++ 2005 Express Edition

Microsoft Platform SDK for Windows Server 2003 R2

Wednesday, September 27, 2006

KDE.org asked how to use xdelta3. Use it like gzip, with the additional -s SOURCE flag. Like gzip, -d means to decompress, and the default is to compress. For output, the -c and -f flags behave as they do in gzip. Unlike gzip, xdelta3 defaults to stdout (instead of having an automatic extension). Without -s SOURCE, xdelta3 behaves like gzip for stdin/stdout purposes. See also.

Compress examples:

xdelta3 -s SOURCE TARGET > OUT

xdelta3 -s SOURCE TARGET OUT

xdelta3 -s SOURCE < TARGET > OUT

Decompress examples:

xdelta3 -d -s SOURCE OUT > TARGET

xdelta3 -d -s SOURCE OUT TARGET

xdelta3 -d -s SOURCE < OUT > TARGET
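If you drive xdelta3 from a script, the file-argument forms above map onto argument lists like this. This is a minimal sketch: xdelta3_argv and run are hypothetical helper names, it uses only the -d and -s flags shown in the examples, and it assumes an xdelta3 binary is on the PATH when run is actually called.

```python
import subprocess


def xdelta3_argv(source, input_path=None, output_path=None, decode=False):
    """Build an argument list matching the command-line examples above.

    With no input_path, xdelta3 reads stdin; with no output_path it
    writes stdout, as described in the post.
    """
    if input_path is None and output_path is not None:
        raise ValueError("an output file requires an input file argument")
    argv = ["xdelta3"]
    if decode:
        argv.append("-d")
    argv += ["-s", source]
    if input_path is not None:
        argv.append(input_path)
    if output_path is not None:
        argv.append(output_path)
    return argv


def run(argv):
    """Run the command; raises CalledProcessError on a non-zero exit."""
    subprocess.check_call(argv)


if __name__ == "__main__":
    # Prints the commands only; call run() to execute them.
    print(" ".join(xdelta3_argv("SOURCE", "TARGET", "OUT")))
    print(" ".join(xdelta3_argv("SOURCE", "OUT", "TARGET", decode=True)))
```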
